Mount Google Drive

This cell imports the drive library and mounts your Google Drive as a local drive on the VM. You can access your Drive files at the path `/content/gdrive/My Drive/`.

from google.colab import drive

drive.mount('/content/gdrive')

Create Symbolic link for Google Drive

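Run this cell to create a symbolic link at /content/football-pitch-segmentation that points to the project folder in your Google Drive, so later cells can use the shorter path.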

!ln -s "/content/gdrive/My Drive/model_training/detectron2/football-pitch-segmentation" /content/football-pitch-segmentation

Unlink Symbolic link for Google Drive

Run this cell to remove the symbolic link. Use it if the link you created is incorrect or doesn't work.

!unlink /content/football-pitch-segmentation
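If you prefer to manage the link from Python, the two cells above can be combined into one step that is safe to re-run. Below is a minimal sketch that uses only the standard library. ensure_symlink is a hypothetical helper name and is not part of Colab or Detectron2. One reason to do this: if the link already exists and points to a directory, running `ln -s` again creates a second link inside that directory instead of replacing the first one.

```python
import os


def ensure_symlink(target: str, link_path: str) -> str:
    """Point link_path at target, replacing an existing symlink (hypothetical helper)."""
    if not os.path.exists(target):
        # Same situation as a broken link: the Drive folder is missing or Drive is not mounted.
        raise FileNotFoundError(f"Link target does not exist: {target}")
    if os.path.islink(link_path):
        # Remove the old link first, like the `!unlink` cell does.
        os.unlink(link_path)
    elif os.path.exists(link_path):
        # Never delete a real file or folder that happens to have this name.
        raise FileExistsError(f"{link_path} exists and is not a symlink")
    os.symlink(target, link_path)
    # Return the fully resolved path so the caller can confirm where the link points.
    return os.path.realpath(link_path)
```

In this notebook you would call it with the Drive project folder as `target` and `/content/football-pitch-segmentation` as `link_path`.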

Check GPU & CUDA Versions

Check which GPU and CUDA version are available on the instance Google Colab has allocated to you. Note that `nvidia-smi` reports the highest CUDA version the installed driver supports.

!nvidia-smi

Install Detectron2

Detectron2 is not installed by default in Google Colab, so we install it and its dependencies with pip, directly from Facebook Research's official GitHub repository.

!python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

Check CUDA, PyTorch, Detectron2 Versions

Check the versions of:

- Detectron2

- CUDA

- PyTorch

import torch, detectron2

!nvcc --version

TORCH_VERSION = ".".join(torch.__version__.split(".")[:2])

CUDA_VERSION = torch.__version__.split("+")[-1]

print("torch: ", TORCH_VERSION, "; cuda: ", CUDA_VERSION)

print("detectron2:", detectron2.__version__)
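Note that `torch.__version__.split("+")[-1]` returns the whole version string when there is no `+` suffix, which happens with some CPU-only or pip builds. In that case CUDA_VERSION ends up holding something like `2.1.0`. Below is a minimal, more defensive sketch of the same parsing. parse_torch_version is a hypothetical helper. For a more direct reading of the CUDA version PyTorch was built with, use `torch.version.cuda`, which is None on CPU-only builds.

```python
def parse_torch_version(version: str):
    """Split a PyTorch version string such as "2.1.0+cu121" (hypothetical helper).

    Returns (major.minor, local build tag or None).
    """
    public, _, local = version.partition("+")
    major_minor = ".".join(public.split(".")[:2])
    # Builds without a "+" suffix carry no CUDA tag in the version string.
    return major_minor, (local or None)
```

For example, `parse_torch_version(torch.__version__)` returns a pair like `("2.1", "cu121")` on a CUDA build of PyTorch.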

Import Libraries

Here we import the libraries used in this notebook:

- os – Used to build paths for saving and retrieving files in directories

- cv2 – Reads images from disk. OpenCV loads them in BGR channel order, which is also the input format Detectron2's DefaultPredictor expects by default

- datetime – Used to timestamp the training output directory

- cv2_imshow – Used to display images in Google Colab, since the regular cv2.imshow doesn't work there

- register_coco_instances – Registers a COCO-formatted dataset with Detectron2 so that it understands the annotation format

- DatasetCatalog, MetadataCatalog – Used to load the image data & its metadata

- Visualizer – Used to display the predicted images with masks

- ColorMode – Controls how the Visualizer colors instances, e.g. IMAGE_BW turns areas without masks grayscale

- model_zoo – Gives access to the Detectron2 model zoo, i.e., the repository from which pre-trained models & weights can be downloaded

- get_cfg – Returns a default configuration object, which is then populated with a model's settings and used for training

- DefaultPredictor – Instantiates a Predictor object used to make predictions with the trained model

- DefaultTrainer – Used to train the Detectron2 model

# COMMON LIBRARIES

import os

import cv2

from datetime import datetime

from google.colab.patches import cv2_imshow

# DATA SET PREPARATION AND LOADING

from detectron2.data.datasets import register_coco_instances

from detectron2.data import DatasetCatalog, MetadataCatalog

# VISUALIZATION

from detectron2.utils.visualizer import Visualizer

from detectron2.utils.visualizer import ColorMode

# CONFIGURATION

from detectron2 import model_zoo

from detectron2.config import get_cfg

# EVALUATION

from detectron2.engine import DefaultPredictor

# TRAINING

from detectron2.engine import DefaultTrainer

Check if Everything is Installed Correctly – Run a Pre-trained Detectron2 Model

Before you start training, it’s a good idea to check that everything is working properly. The best way to do this is to perform inference using a pre-trained model.

First, we download the image we will run inference on, then read it and display it.

!wget http://images.cocodataset.org/val2017/000000439715.jpg -q -O input.jpg

image = cv2.imread("./input.jpg")

cv2_imshow(image)

Get Configuration File & Predict on Image

In the code below we do the following:

- Instantiate a configuration object

- Pull the Mask R-CNN config YAML file from the Detectron2 model zoo (the place where all models are stored) and merge it into the configuration

- Set the test score threshold (ROI_HEADS.SCORE_THRESH_TEST), i.e., the minimum confidence score a prediction needs in order to be kept

- Set the pre-trained weights to the Mask R-CNN weights from the model zoo

- Instantiate a Detectron2 DefaultPredictor for inference

- Run inference on an image

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

predictor = DefaultPredictor(cfg)

outputs = predictor(image)
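The score threshold set above decides which detections survive. Conceptually it is a simple filter. Detectron2 applies it inside the ROI heads together with non-maximum suppression, so the sketch below only illustrates the idea and does not reproduce the library code. filter_by_score is a hypothetical helper that works on plain (class_id, score) pairs.

```python
from typing import List, Tuple


def filter_by_score(predictions: List[Tuple[int, float]], thresh: float) -> List[Tuple[int, float]]:
    """Keep (class_id, score) pairs whose score is strictly above thresh (hypothetical helper)."""
    return [(cls, score) for cls, score in predictions if score > thresh]
```

With a threshold of 0.5, a detection scored exactly 0.5 is dropped and one scored 0.9 is kept.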

Check Bounding Box Co-ordinates & Predicted Classes

Check the outputs of the prediction, i.e., the predicted classes & corresponding bounding box coordinates

print(outputs["instances"].pred_classes)

print(outputs["instances"].pred_boxes)
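pred_boxes is a Detectron2 Boxes object. Its underlying tensor (`.tensor`) stores one (x0, y0, x1, y1) row per detection, in absolute pixel coordinates. COCO annotation files use [x, y, width, height] instead. The sketch below converts between the two formats and computes a box's area. The helper names are hypothetical. They work on plain lists, such as the output of `outputs["instances"].pred_boxes.tensor.cpu().tolist()`.

```python
def xyxy_to_xywh(box):
    """Convert (x0, y0, x1, y1) corners to COCO-style [x, y, width, height]."""
    x0, y0, x1, y1 = box
    return [x0, y0, x1 - x0, y1 - y0]


def box_area(box):
    """Area of an (x0, y0, x1, y1) box; degenerate boxes count as zero."""
    x0, y0, x1, y1 = box
    return max(0.0, x1 - x0) * max(0.0, y1 - y0)
```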

Visualize Predictions

Get an image with the predicted bounding boxes, masks & classes drawn on it

visualizer = Visualizer(image[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)

out = visualizer.draw_instance_predictions(outputs["instances"].to("cpu"))

cv2_imshow(out.get_image()[:, :, ::-1])
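Why the `[:, :, ::-1]` slices? Three things need different channel orders:

- cv2.imread returns pixels in BGR order.
- Visualizer expects an RGB image, and get_image() also returns RGB.
- cv2_imshow expects BGR again.

Reversing the last (channel) axis converts between the two orders. Here is the same operation on nested Python lists, as a small illustration. reverse_channels is a hypothetical name.

```python
def reverse_channels(image):
    """Pure-Python analogue of image[:, :, ::-1] for an H x W x C nested list."""
    # Each pixel is a list of channel values; reversing it swaps BGR <-> RGB.
    return [[pixel[::-1] for pixel in row] for row in image]
```

Applying the function twice returns the original image, which is why the notebook can slice once on the way into Visualizer and once on the way out.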

Football Dataset

We use the `football-pitch-segmentation` dataset as an example. This dataset is in `COCO Segmentation` format.

Your dataset should have the following structure:

dataset-directory/
├─ README.dataset.txt
├─ README.roboflow.txt
├─ train
│  ├─ train-image-1.jpg
│  ├─ train-image-2.jpg
│  ├─ …
│  └─ _annotations.coco.json
├─ test
│  ├─ test-image-1.jpg
│  ├─ test-image-2.jpg
│  ├─ …
│  └─ _annotations.coco.json
└─ valid
   ├─ valid-image-1.jpg
   ├─ valid-image-2.jpg
   ├─ …
   └─ _annotations.coco.json
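Before registering the data, it can save time to confirm that the downloaded folder matches this layout. Below is a minimal sketch, assuming the split folder names and annotation file name shown in the tree. check_dataset_layout is a hypothetical helper, and the image extensions it looks for are an assumption.

```python
import os
from typing import Dict, List

SPLITS = ("train", "test", "valid")
ANNOTATIONS_FILE_NAME = "_annotations.coco.json"
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")  # assumption: adjust to your export


def check_dataset_layout(root: str) -> Dict[str, List[str]]:
    """Return a list of problems per split; an empty dict means the layout looks right."""
    problems = {}
    for split in SPLITS:
        split_dir = os.path.join(root, split)
        issues = []
        if not os.path.isdir(split_dir):
            issues.append("missing directory")
        else:
            if not os.path.isfile(os.path.join(split_dir, ANNOTATIONS_FILE_NAME)):
                issues.append(f"missing {ANNOTATIONS_FILE_NAME}")
            images = [f for f in os.listdir(split_dir) if f.lower().endswith(IMAGE_EXTENSIONS)]
            if not images:
                issues.append("no images")
        if issues:
            problems[split] = issues
    return problems
```

Run it on `dataset.location` once the Roboflow download has finished. An empty dict means nothing obvious is missing.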

Get Dataset from Roboflow

Install the Roboflow package & download the football-pitch-segmentation dataset.

api_key – Enter the API key from your Roboflow dashboard.

!pip install roboflow

from roboflow import Roboflow

rf = Roboflow(api_key="______________")

project = rf.workspace("roboflow-jvuqo").project("football-pitch-segmentation")

dataset = project.version(1).download("coco-segmentation")
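Pasting the API key into the notebook makes it easy to leak when the notebook is shared. One alternative is to read the key from an environment variable, as sketched below. The variable name ROBOFLOW_API_KEY and the get_roboflow_api_key helper are assumptions for illustration, not something Roboflow requires.

```python
import os


def get_roboflow_api_key(env_var: str = "ROBOFLOW_API_KEY") -> str:
    """Read the Roboflow API key from an environment variable (hypothetical helper)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail loudly instead of sending an empty key to the Roboflow client.
        raise RuntimeError(f"Set the {env_var} environment variable to your Roboflow API key")
    return key
```

The download cell would then use `rf = Roboflow(api_key=get_roboflow_api_key())`.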

Register Dataset with Detectron2

Detectron2 requires you to register a dataset before you can use it to train a model.

Registering tells Detectron2 how to obtain your dataset. To make a dataset named "football-pitch-segmentation" available, you implement a function that returns the items in the dataset and then tell Detectron2 about this function. For COCO-formatted data, Detectron2's register_coco_instances function, which we imported from detectron2.data.datasets, does this for us, so below we use it to register our dataset.
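register_coco_instances does not read the annotation file right away. Instead, it registers a loader that parses the COCO JSON (its images, annotations and categories arrays) the first time DatasetCatalog.get is called. Peeking at the JSON yourself is a quick sanity check. The number of categories is also what NUM_CLASSES should match later, because Detectron2's COCO loader turns every entry in categories into a class. Below is a minimal sketch using the standard library. summarize_coco is a hypothetical helper.

```python
import json
from collections import Counter


def summarize_coco(json_path: str) -> dict:
    """Count images, annotations and per-class instances in a COCO JSON file."""
    with open(json_path) as f:
        coco = json.load(f)
    # Order classes by their original category id, as Detectron2's loader does.
    categories = sorted(coco["categories"], key=lambda c: c["id"])
    id_to_name = {c["id"]: c["name"] for c in categories}
    per_class = Counter(id_to_name[a["category_id"]] for a in coco["annotations"])
    return {
        "num_images": len(coco["images"]),
        "num_annotations": len(coco["annotations"]),
        "class_names": [c["name"] for c in categories],
        "annotations_per_class": dict(per_class),
    }
```

For example, call it on `os.path.join(dataset.location, "train", "_annotations.coco.json")` and compare `len(summary["class_names"])` with NUM_CLASSES.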

DATA_SET_NAME = dataset.name.replace(" ", "-")

ANNOTATIONS_FILE_NAME = "_annotations.coco.json"

# TRAIN SET
TRAIN_DATA_SET_NAME = f"{DATA_SET_NAME}-train"
TRAIN_DATA_SET_IMAGES_DIR_PATH = os.path.join(dataset.location, "train")
TRAIN_DATA_SET_ANN_FILE_PATH = os.path.join(dataset.location, "train", ANNOTATIONS_FILE_NAME)

register_coco_instances(
    name=TRAIN_DATA_SET_NAME,
    metadata={},
    json_file=TRAIN_DATA_SET_ANN_FILE_PATH,
    image_root=TRAIN_DATA_SET_IMAGES_DIR_PATH
)

# TEST SET
TEST_DATA_SET_NAME = f"{DATA_SET_NAME}-test"
TEST_DATA_SET_IMAGES_DIR_PATH = os.path.join(dataset.location, "test")
TEST_DATA_SET_ANN_FILE_PATH = os.path.join(dataset.location, "test", ANNOTATIONS_FILE_NAME)

register_coco_instances(
    name=TEST_DATA_SET_NAME,
    metadata={},
    json_file=TEST_DATA_SET_ANN_FILE_PATH,
    image_root=TEST_DATA_SET_IMAGES_DIR_PATH
)

# VALID SET
VALID_DATA_SET_NAME = f"{DATA_SET_NAME}-valid"
VALID_DATA_SET_IMAGES_DIR_PATH = os.path.join(dataset.location, "valid")
VALID_DATA_SET_ANN_FILE_PATH = os.path.join(dataset.location, "valid", ANNOTATIONS_FILE_NAME)

register_coco_instances(
    name=VALID_DATA_SET_NAME,
    metadata={},
    json_file=VALID_DATA_SET_ANN_FILE_PATH,
    image_root=VALID_DATA_SET_IMAGES_DIR_PATH
)
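The three registration blocks differ only in the split name, so they can also be generated in a loop. The sketch below follows the same naming convention (`<DATA_SET_NAME>-<split>`). coco_split_paths is a hypothetical helper. Detectron2 raises an error if you register a name that is already registered. To keep the cell safe to re-run, the commented usage skips names already listed in DatasetCatalog.list().

```python
import os


def coco_split_paths(dataset_location, dataset_name, splits=("train", "test", "valid"),
                     ann_file="_annotations.coco.json"):
    """Map each dataset name to its (json_file, image_root) pair (hypothetical helper)."""
    return {
        f"{dataset_name}-{split}": (
            os.path.join(dataset_location, split, ann_file),
            os.path.join(dataset_location, split),
        )
        for split in splits
    }

# Usage in the notebook (requires detectron2 and the `dataset` object from Roboflow):
# from detectron2.data import DatasetCatalog
# from detectron2.data.datasets import register_coco_instances
# for name, (json_file, image_root) in coco_split_paths(dataset.location, DATA_SET_NAME).items():
#     if name not in DatasetCatalog.list():
#         register_coco_instances(name, {}, json_file, image_root)
```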

Check if Pre-trained Detectron2 Model is Working

We check that the downloaded pre-trained Detectron2 model works. Before training the model on our dataset, we do a quick check: we run inference on an image and see whether the model predicts the masks, bounding boxes & classes correctly.

Below we download an image from the COCO dataset & display it

!wget http://images.cocodataset.org/val2017/000000439715.jpg -q -O input.jpg

image = cv2.imread("./input.jpg")

cv2_imshow(image)

In the code snippet below we do the following:

- cfg – Instantiate a configuration object; it holds the model parameters used for training and inference

- merge_from_file – Populate the configuration object from a YAML file that holds the parameters for the model

- ROI_HEADS.SCORE_THRESH_TEST – Set this to 0.5 (50%), which tells the model to keep only predictions with a confidence score above 0.5

- WEIGHTS – Set the checkpoint, i.e., the weights to use for inference

- DefaultPredictor – Instantiate a predictor object by passing the configuration object to it

- predictor(image) – Pass the image to the predictor & get the inference outputs

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

predictor = DefaultPredictor(cfg)

outputs = predictor(image)

Verify that inference ran correctly by printing the tensors that hold the predicted classes & bounding boxes

print(outputs["instances"].pred_classes)

print(outputs["instances"].pred_boxes)

Check if Custom Dataset Is Registered Correctly

Check that the custom dataset was registered correctly using MetadataCatalog.

The code below should list the names of the train, test & validation datasets:

[

data_set

for data_set

in MetadataCatalog.list()

if data_set.startswith(DATA_SET_NAME)

]
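Reading the printed list works, but you can also make the check explicit. The sketch below assumes the `-train`, `-test` and `-valid` suffixes used above. missing_registrations is a hypothetical helper. Pass it MetadataCatalog.list() and DATA_SET_NAME; an empty list means all three splits are registered.

```python
def missing_registrations(registered, dataset_name, splits=("train", "test", "valid")):
    """Return the expected dataset names that are absent from `registered`, sorted."""
    expected = {f"{dataset_name}-{split}" for split in splits}
    return sorted(expected - set(registered))
```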

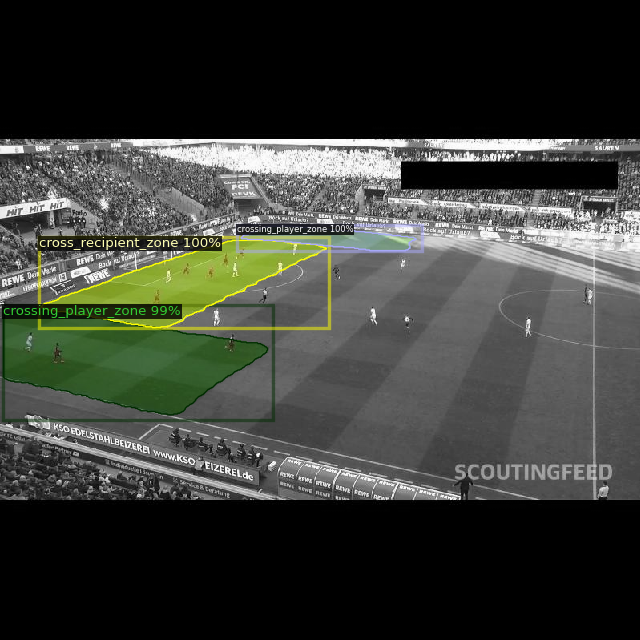

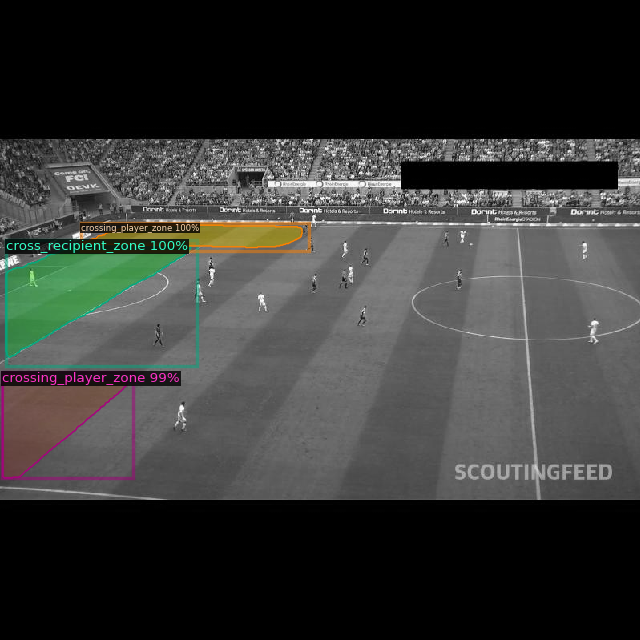

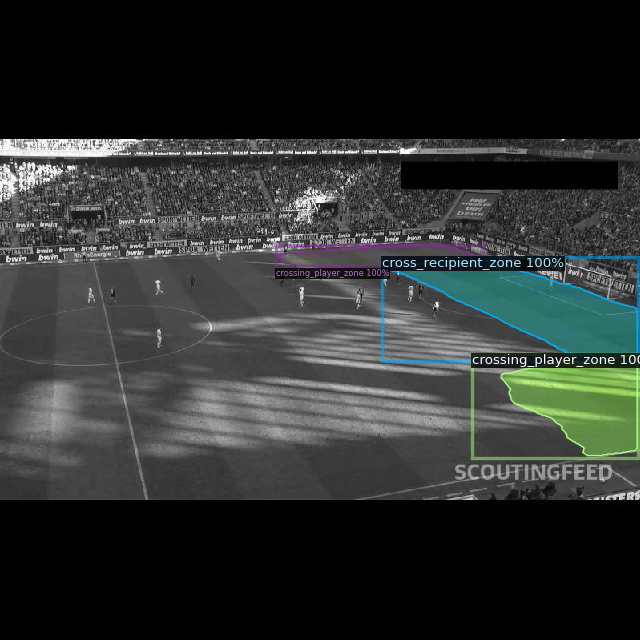

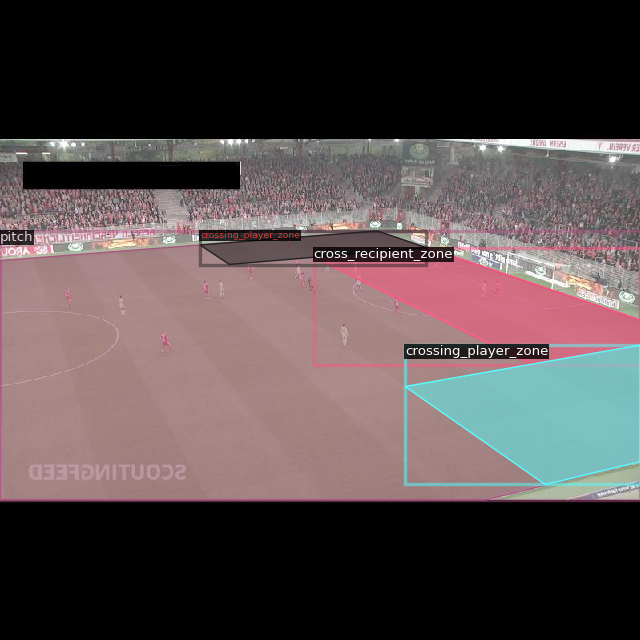

Visualize Training Dataset Image

Let's take a look at one of the training images, with its mask, bounding box & class drawn on it

metadata = MetadataCatalog.get(TRAIN_DATA_SET_NAME)

dataset_train = DatasetCatalog.get(TRAIN_DATA_SET_NAME)

dataset_entry = dataset_train[0]

image = cv2.imread(dataset_entry["file_name"])

visualizer = Visualizer(

image[:, :, ::-1],

metadata=metadata,

scale=0.8,

instance_mode=ColorMode.IMAGE_BW

)

out = visualizer.draw_dataset_dict(dataset_entry)

cv2_imshow(out.get_image()[:, :, ::-1])
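Each item returned by DatasetCatalog.get is a plain dict in Detectron2's standard dataset format. It has the keys file_name, height, width and image_id, plus an annotations list whose category_id values index into metadata.thing_classes. Counting instances per class is a quick way to spot a rare or missing class before training. Below is a minimal sketch. count_instances is a hypothetical helper, used as `count_instances(dataset_train, metadata.thing_classes)`.

```python
from collections import Counter


def count_instances(dataset_dicts, class_names):
    """Count annotated instances per class name across Detectron2-style dataset dicts."""
    counts = Counter()
    for record in dataset_dicts:
        # Images without any annotation simply contribute nothing.
        for ann in record.get("annotations", []):
            counts[class_names[ann["category_id"]]] += 1
    return dict(counts)
```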

Train Model – Set Hyperparameters & Output Directory

The code below sets the hyperparameters for the configuration and then sets the output directory. Here the output directory is a Google Drive location, for persistent storage. The storage allocated in Google Colab is temporary and is released as soon as you disconnect from the Colab instance.

You also have the option to use Google Colab's temporary storage. If you do, be sure to back up / download the trained model to your computer once training is finished.

# HYPERPARAMETERS

#https://github.com/facebookresearch/detectron2/blob/main/configs/COCO-InstanceSegmentation/mask_rcnn_R_101_FPN_3x.yaml

ARCHITECTURE = "mask_rcnn_R_101_FPN_3x"

CONFIG_FILE_PATH = f"COCO-InstanceSegmentation/{ARCHITECTURE}.yaml"

MAX_ITER = 2000

EVAL_PERIOD = 200

BASE_LR = 0.001

NUM_CLASSES = 3

# OUTPUT DIR – Uncomment to use Google Colab's temporarily allocated storage; however, you'll lose the trained model once you disconnect from Colab
# OUTPUT_DIR_PATH = os.path.join(
#     DATA_SET_NAME,
#     ARCHITECTURE,
#     datetime.now().strftime('%Y-%m-%d-%H-%M-%S')
# )

# OUTPUT DIR – Customized output dir for GDrive
GDRIVE_PATH = '/content/football-pitch-segmentation'

OUTPUT_DIR_PATH = os.path.join(
    GDRIVE_PATH,
    ARCHITECTURE,
    datetime.now().strftime('%Y-%m-%d-%H-%M-%S')
)

os.makedirs(OUTPUT_DIR_PATH, exist_ok=True)
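Suppose the symbolic link from the beginning of the notebook was never created. Then os.makedirs above quietly creates an ordinary folder at /content/football-pitch-segmentation, i.e. in temporary storage, and the trained model is lost on disconnect. Below is a sketch of a more explicit choice between the Drive path and a local fallback. make_output_dir is hypothetical. Falling back instead of stopping with an error is a design assumption you may want to reverse.

```python
import os
from datetime import datetime


def make_output_dir(preferred_root, fallback_root, architecture, now=None):
    """Create <root>/<architecture>/<timestamp>, using preferred_root only if it already exists."""
    # os.path.isdir follows symlinks, so a working Drive link counts as present.
    root = preferred_root if os.path.isdir(preferred_root) else fallback_root
    stamp = (now or datetime.now()).strftime("%Y-%m-%d-%H-%M-%S")
    path = os.path.join(root, architecture, stamp)
    os.makedirs(path, exist_ok=True)
    return path
```

For example, `OUTPUT_DIR_PATH = make_output_dir(GDRIVE_PATH, DATA_SET_NAME, ARCHITECTURE)` mirrors the two options above. Printing the returned path shows which storage was actually used.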

Train Model – Configuration File Setup

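We need to provide a configuration to train the model. In the code snippet below we do the following:

- Instantiate a config object

- Merge the existing config file into this object

- Weights – Provide the path to the pre-trained weights

- Train Dataset – Set the train dataset

- Test Dataset – Set the test dataset, which is evaluated every EVAL_PERIOD iterations. Note that the stock DefaultTrainer has no built-in evaluator: unless build_evaluator is implemented, it logs a warning and skips this evaluation

- Set the other parameters as given below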

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file(CONFIG_FILE_PATH))

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url(CONFIG_FILE_PATH)

cfg.DATASETS.TRAIN = (TRAIN_DATA_SET_NAME,)

cfg.DATASETS.TEST = (TEST_DATA_SET_NAME,)

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 64

cfg.TEST.EVAL_PERIOD = EVAL_PERIOD

cfg.DATALOADER.NUM_WORKERS = 2

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.INPUT.MASK_FORMAT='bitmask'

cfg.SOLVER.BASE_LR = BASE_LR

cfg.SOLVER.MAX_ITER = MAX_ITER

cfg.MODEL.ROI_HEADS.NUM_CLASSES = NUM_CLASSES

cfg.OUTPUT_DIR = OUTPUT_DIR_PATH
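MAX_ITER counts iterations, not epochs. Each iteration consumes IMS_PER_BATCH images, so the number of passes over the training set is roughly MAX_ITER × IMS_PER_BATCH / number of training images. With MAX_ITER = 2000 and IMS_PER_BATCH = 2, the model sees 4,000 images. For a hypothetical training set of 400 images, that is about 10 epochs. Below is a small sketch of that arithmetic; the helper names are hypothetical.

```python
import math


def approx_epochs(max_iter, ims_per_batch, num_train_images):
    """Approximate number of passes over the training set."""
    return max_iter * ims_per_batch / num_train_images


def iters_for_epochs(epochs, ims_per_batch, num_train_images):
    """Iterations needed to cover the training set `epochs` times, rounded up."""
    return math.ceil(epochs * num_train_images / ims_per_batch)
```

In the notebook, the real dataset size is `len(DatasetCatalog.get(TRAIN_DATA_SET_NAME))`.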

Training Model

- DefaultTrainer(cfg) – To train the model, you pass the configuration object to the trainer

- trainer.resume_or_load(resume=False) – You then specify whether to resume training from a checkpoint; if resume=True, training resumes from the last checkpoint in cfg.OUTPUT_DIR

- trainer.train() – Starts the training process

trainer = DefaultTrainer(cfg)

trainer.resume_or_load(resume=False)

trainer.train()
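With resume=True, resume_or_load looks for a file named last_checkpoint in cfg.OUTPUT_DIR. If that file exists, training continues from the checkpoint it names, including the iteration count. Otherwise cfg.MODEL.WEIGHTS is loaded, just as with resume=False. Because OUTPUT_DIR_PATH ends in a fresh timestamp, a new run never finds a checkpoint. To resume, first point cfg.OUTPUT_DIR at the earlier run's folder. Below is a sketch of the same existence check. has_checkpoint here is a hypothetical standalone helper that mirrors what Detectron2's checkpointer looks for.

```python
import os


def has_checkpoint(output_dir):
    """True if output_dir holds the 'last_checkpoint' marker written during training."""
    return os.path.isfile(os.path.join(output_dir, "last_checkpoint"))
```

For example, `trainer.resume_or_load(resume=has_checkpoint(cfg.OUTPUT_DIR))` resumes only when there is something to resume from.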

Tensorboard – To Check training progress

Get a graphical representation of training parameters like:

- Total Loss

- Classification Loss

- Learning Rate

- Mask Loss

# Look at training curves in tensorboard:

%load_ext tensorboard

%tensorboard --logdir $OUTPUT_DIR_PATH
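TensorBoard reads the event files in the output directory. DefaultTrainer also writes the same scalars to metrics.json in cfg.OUTPUT_DIR, one JSON object per line, with keys such as iteration, total_loss, loss_mask and lr. If TensorBoard does not load in Colab, a sketch like the one below can pull a single curve out of that file. read_metric is a hypothetical helper. It skips lines without the requested key, such as evaluation results.

```python
import json


def read_metric(metrics_path, key="total_loss"):
    """Return (iteration, value) pairs for one metric from a line-delimited metrics.json."""
    points = []
    with open(metrics_path) as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            record = json.loads(line)
            if key in record and "iteration" in record:
                points.append((record["iteration"], record[key]))
    return points
```

For example, `read_metric(os.path.join(OUTPUT_DIR_PATH, "metrics.json"), "loss_mask")` returns the mask-loss curve.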

Evaluate the Trained Model

Now that the model is trained we evaluate its performance by performing the following steps:

- Load Weights – Point the configuration at the trained model weights

- Score Threshold – Set ROI_HEADS.SCORE_THRESH_TEST so that only predictions with a confidence score above 0.7 are kept

- Predictor – Instantiate a predictor object by passing the configuration object to it

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7

predictor = DefaultPredictor(cfg)
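The next section checks predictions by eye. To get a number instead, predictions are usually matched to ground truth by Intersection over Union (IoU), the overlap measure that COCO-style AP is computed over at thresholds from 0.5 to 0.95. For the full metric, Detectron2 provides COCOEvaluator and inference_on_dataset in detectron2.evaluation. The plain-Python box IoU below is only a sketch of the underlying quantity. It takes boxes in the (x0, y0, x1, y1) format that pred_boxes uses.

```python
def box_iou(a, b):
    """Intersection over Union of two (x0, y0, x1, y1) boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```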

Visualize & Evaluate the Trained Model

In the code below, we loop through each image in the validation dataset and pass it to the model to make a prediction. We then visualize the prediction to check whether it is accurate.

dataset_valid = DatasetCatalog.get(VALID_DATA_SET_NAME)

for d in dataset_valid:
    img = cv2.imread(d["file_name"])
    outputs = predictor(img)
    visualizer = Visualizer(
        img[:, :, ::-1],
        metadata=metadata,
        scale=0.8,
        instance_mode=ColorMode.IMAGE_BW
    )
    out = visualizer.draw_instance_predictions(outputs["instances"].to("cpu"))
    cv2_imshow(out.get_image()[:, :, ::-1])